Flexible Data Placement (FDP) is a feature of the NVMe™ specification¹ proposed by Google and Meta. Its purpose is to reduce write amplification (WA) when multiple applications write and modify data on the same NVMe SSD. For these companies, the benefits of reduced WA include increased usable capacity (because less over-provisioning is required) and potentially longer device life (because of reduced wear).

We ran an experiment to determine how helpful FDP might be. In this test, we split a 7.68TB Micron® 7450 PRO SSD into four equal 1.92TB namespaces and ran parallel instances of the Aerospike NoSQL database against it. Aerospike is highly optimized for SSD usage and attempts to write only sequentially to the device. This gives a very low write amplification factor (WAF) for a single instance, but when multiple instances run on the same physical device, their writes are interleaved, which makes the combined workload look random to the drive.

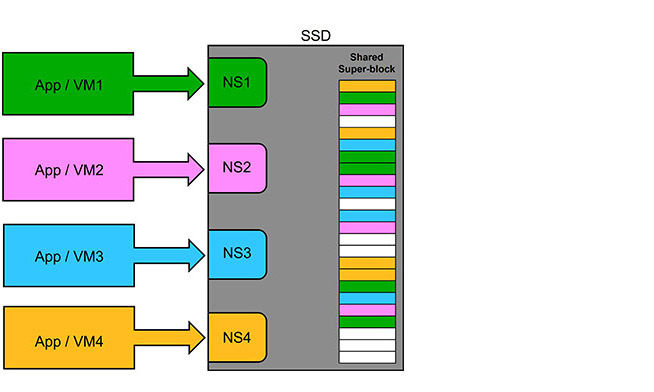

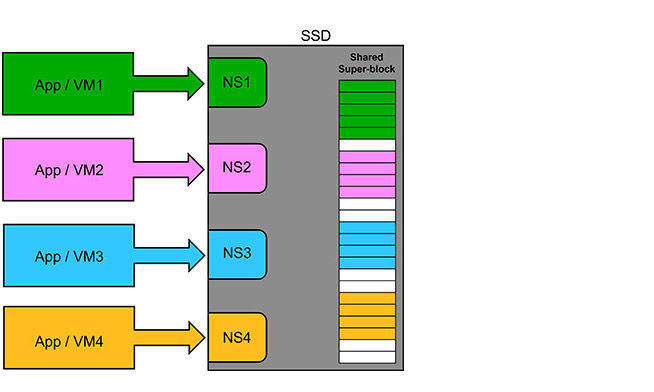

Each Aerospike instance was configured to write with a different block size (32KB, 64KB, 128KB, or 256KB) to simulate different customers sharing space in a virtual environment. We also ran these workloads individually on four separate 1.92TB Micron 7450 PRO SSDs, which we treat as the ideal implementation of FDP: each application's data receives dedicated NAND space, so data does not get interleaved on the device the way it does in Figure 1.
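To make the interleaving effect concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the test harness used in this experiment: the round-robin arrival order, the scaled-down namespace size, and all function names are hypothetical. It models four writers that are each perfectly sequential within their own address range, using the block sizes listed above, and measures how often a write in the merged stream starts exactly where the previous one ended.

```python
from itertools import zip_longest

KIB = 1024
NAMESPACE_BYTES = 1 << 30  # hypothetical, scaled-down namespace size (1 GiB)


def sequential_stream(stream_id, block_size, count):
    """Yield (stream_id, offset, length) writes that are strictly
    sequential inside the address range owned by one stream."""
    base = stream_id * NAMESPACE_BYTES
    for i in range(count):
        yield (stream_id, base + i * block_size, block_size)


def interleave(streams):
    """Round-robin merge: an assumed stand-in for the order in which
    concurrent writes from several instances might reach the drive."""
    for group in zip_longest(*streams):
        for write in group:
            if write is not None:
                yield write


def sequential_fraction(writes):
    """Fraction of writes that begin exactly where the previous one ended."""
    writes = list(writes)
    if len(writes) < 2:
        return 1.0
    hits = sum(
        1
        for prev, cur in zip(writes, writes[1:])
        if cur[1] == prev[1] + prev[2]
    )
    return hits / (len(writes) - 1)


block_sizes = [32 * KIB, 64 * KIB, 128 * KIB, 256 * KIB]

# One instance on its own: every write follows the previous one.
single = sequential_fraction(sequential_stream(0, block_sizes[0], 100))

# Four instances sharing a device: each is still sequential on its own,
# but the merged stream seen by the drive is not.
mixed = sequential_fraction(
    interleave(
        [sequential_stream(i, bs, 100) for i, bs in enumerate(block_sizes)]
    )
)

print(f"single stream: {single:.2f}, four interleaved streams: {mixed:.2f}")
```

This only measures address contiguity in the arrival order. It does not model NAND block allocation or garbage collection, so it shows why the merged workload looks random to the drive, not how much write amplification results.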

Because of Aerospike's optimizations, we expected to see a WAF close to 1 when running a single instance of the application on its own dedicated storage device. We confirmed this by running YCSB workload A (50% read/50% update) until the drive had been filled multiple times. In an ideal scenario, four namespaces on a large device would behave the same as four single devices. The layout of this scenario is shown in Figure 2.
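For reference, WAF is conventionally the ratio of bytes physically written to NAND to bytes written by the host over the same interval. The sketch below shows that calculation in Python. The counter values are hypothetical placeholders chosen for illustration, not measurements from this test, and how the two counters are read from a drive is vendor-specific and not shown here.

```python
def write_amplification_factor(nand_bytes_written, host_bytes_written):
    """WAF = bytes written to NAND / bytes written by the host."""
    if host_bytes_written <= 0:
        raise ValueError("host_bytes_written must be positive")
    return nand_bytes_written / host_bytes_written


# Hypothetical counter deltas taken before and after a workload run.
host_delta = 20_000_000_000_000  # 20 TB written by the host (assumed)
nand_delta = 21_600_000_000_000  # 21.6 TB written to NAND (assumed)

waf = write_amplification_factor(nand_delta, host_delta)
print(f"WAF = {waf:.2f}")
```

A WAF of exactly 1 would mean the drive wrote no more data internally than the host sent. Values above 1 reflect additional internal writes such as garbage collection.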

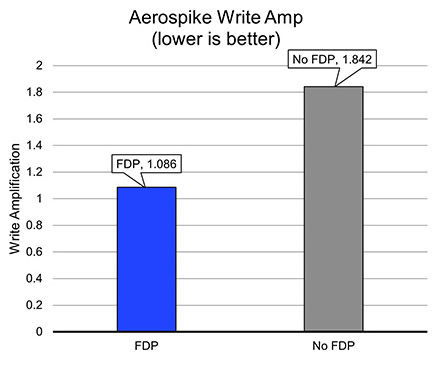

Without FDP, when multiple workloads run on the 7.68TB device, the drive does not separate the NAND by namespace and has no way of knowing which data belongs to which Aerospike instance and should therefore be grouped together. As Figure 1 shows, interleaving makes the workload effectively more random from the drive's perspective, even though each individual workload is sequential. With this increase in randomness, we see a corresponding increase in WA: 1.84 for four instances co-located on a non-FDP device, up from 1.08 for a single instance on its own dedicated device, as seen in Figure 3.
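The two measured WAF values can be put in perspective with some simple arithmetic. The Python sketch below uses only the 1.08 and 1.84 figures reported above. The endurance comparison rests on an assumption that is ours, not a measured result: that wear scales linearly with bytes written to NAND, so a fixed NAND write budget supports host writes in proportion to 1/WAF.

```python
WAF_DEDICATED = 1.08  # single instance on its own device (reported above)
WAF_SHARED = 1.84     # four instances on one non-FDP device (reported above)

# Relative increase in NAND writes for the same amount of host data.
relative_increase = WAF_SHARED / WAF_DEDICATED - 1

# Extra NAND bytes written per host byte in the shared case.
extra_nand_per_host_byte = WAF_SHARED - WAF_DEDICATED

# Assumption: for a fixed NAND write budget, the host data that can be
# written scales with 1 / WAF.
host_write_ratio = WAF_DEDICATED / WAF_SHARED

print(f"NAND writes increase by {relative_increase:.0%}")
print(f"Extra NAND bytes per host byte: {extra_nand_per_host_byte:.2f}")
print(f"Host writes supported relative to dedicated: {host_write_ratio:.0%}")
```

Under that linear-wear assumption, the shared device absorbs roughly 70% more NAND writes for the same host workload, and the same NAND write budget supports a little under 60% of the host writes possible in the dedicated case.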

While this is a basic experiment, it shows the potential benefits of implementing FDP in future devices. It also shows how applications that are designed to write sequentially as much as possible would benefit from FDP when contending for the same drive resources.

¹ See https://nvmexpress.org/wp-content/uploads/Hyperscale-Innovation-Flexible-Data-Placement-Mode-FDP.pdf for additional details, from a presentation to NVM Express by Chris Sabol (Google) and Ross Stenfort (Meta).